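LeNet-5 Implementation with NumPy

This post implements the LeNet-5 network with NumPy, organizing the network layers as modules along the lines of toxtli/lenet-5-mnist-from-scratch-numpy.

Full code: zjZSTU/PyNet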

Convolutional Layer

During forward propagation, the variable shapes change as follows:

| Variable | Shape |
|---|---|
| input | [N, C, H, W] |
| a | [N*out_h*out_w, C*filter_h*filter_w] |
| W | [C*filter_h*filter_w, filter_num] |
| b | [1, filter_num] |
| z | [N*out_h*out_w, filter_num] |
| output | [N, filter_num, out_h, out_w] |

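Note the following:

- The input argument input must be converted into the 2-D row-vector matrix a, one receptive field per row:

  im2row_indices(x, field_height, field_width, padding=1, stride=1)

- The 2-D matrix z must be converted into the 4-D volume output:

  conv_fc2output(inputs, batch_size, out_height, out_width)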
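The forward shape flow in the table can be sketched as follows. This is a loop-based illustration, not the PyNet code: `im2row_sketch` and `conv_forward_sketch` are hypothetical names, and the row ordering (sample, then output row, then output column) is an assumption chosen so that a plain reshape restores the 4-D output.

```python
import numpy as np


def im2row_sketch(x, field_height, field_width, padding=1, stride=1):
    """Unfold x [N, C, H, W] into a [N*out_h*out_w, C*field_height*field_width]."""
    N, C, H, W = x.shape
    out_h = (H + 2 * padding - field_height) // stride + 1
    out_w = (W + 2 * padding - field_width) // stride + 1
    # Zero-pad only the two spatial axes
    x_pad = np.pad(x, ((0, 0), (0, 0), (padding, padding), (padding, padding)))
    a = np.empty((N * out_h * out_w, C * field_height * field_width), dtype=x.dtype)
    row = 0
    for n in range(N):
        for i in range(out_h):
            for j in range(out_w):
                top, left = i * stride, j * stride
                patch = x_pad[n, :, top:top + field_height, left:left + field_width]
                a[row] = patch.reshape(-1)  # one receptive field per row
                row += 1
    return a, out_h, out_w


def conv_forward_sketch(x, W, b, field_height, field_width, padding=1, stride=1):
    N = x.shape[0]
    a, out_h, out_w = im2row_sketch(x, field_height, field_width, padding, stride)
    z = a @ W + b  # [N*out_h*out_w, filter_num]
    # Rows of z are ordered (n, i, j); restore [N, filter_num, out_h, out_w]
    output = z.reshape(N, out_h, out_w, -1).transpose(0, 3, 1, 2)
    return a, z, output


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 3, 5, 5))    # N=2, C=3, H=W=5
W = rng.standard_normal((3 * 3 * 3, 4))  # 3x3 filters, filter_num=4
b = np.zeros((1, 4))
a, z, output = conv_forward_sketch(x, W, b, 3, 3, padding=1, stride=1)
print(a.shape, z.shape, output.shape)  # (50, 27) (50, 4) (2, 4, 5, 5)
```

The explicit loops keep the indexing readable; an index-array version (as the name im2row_indices suggests) would typically be much faster.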

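During backpropagation, the gradient shapes change as follows (each gradient has the same shape as the variable it belongs to):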

| Variable | Shape |
|---|---|
| doutput | [N, filter_num, out_h, out_w] |
| dz | [N*out_h*out_w, filter_num] |
| dW | [C*filter_h*filter_w, filter_num] |
| db | [1, filter_num] |
| da | [N*out_h*out_w, C*filter_h*filter_w] |
| dinput | [N, C, H, W] |

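Note the following:

- The 4-D input doutput must be converted into the 2-D gradient matrix dz:

  conv_output2fc(inputs)

- The 2-D gradient matrix da must be converted into the 4-D gradient volume dinput:

  row2im_indices(rows, x_shape, field_height=3, field_width=3, padding=1, stride=1, isstinct=False)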
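Once doutput has been flattened to dz, the remaining gradients are ordinary matrix products. The sketch below illustrates this under the assumption that the forward pass computed z = a @ W + b with rows ordered by sample, output row, output column; `conv_backward_sketch` is a hypothetical name, and any regularization term is left out.

```python
import numpy as np


def conv_backward_sketch(doutput, a, W):
    filter_num = doutput.shape[1]
    # [N, filter_num, out_h, out_w] -> [N*out_h*out_w, filter_num]
    dz = doutput.transpose(0, 2, 3, 1).reshape(-1, filter_num)
    dW = a.T @ dz                       # [C*filter_h*filter_w, filter_num]
    db = dz.sum(axis=0, keepdims=True)  # [1, filter_num]
    da = dz @ W.T                       # [N*out_h*out_w, C*filter_h*filter_w]
    return dz, dW, db, da


rng = np.random.default_rng(0)
N, C, filter_h, filter_w, filter_num, out_h, out_w = 2, 3, 3, 3, 4, 5, 5
a = rng.standard_normal((N * out_h * out_w, C * filter_h * filter_w))
W = rng.standard_normal((C * filter_h * filter_w, filter_num))
doutput = rng.standard_normal((N, filter_num, out_h, out_w))
dz, dW, db, da = conv_backward_sketch(doutput, a, W)
print(dz.shape, dW.shape, db.shape, da.shape)  # (50, 4) (27, 4) (1, 4) (50, 27)
```

Turning da back into dinput is the inverse of the unfolding step: each row is added back into the image position it was read from, so overlapping receptive fields accumulate their gradients.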

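Pooling Layer

The main difference between the pooling layer and the convolutional layer is that each pooling operation acts on a single activation map (one channel) at a time, so channels are never mixed.

During forward propagation, the variable shapes change as follows: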

| Variable | Shape |
|---|---|
| input | [N, C, H, W] |
| a | [N*C*out_h*out_w, filter_h*filter_w] |
| z | [N*C*out_h*out_w] |
| output | [N, C, out_h, out_w] |

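Note the following:

- The input argument input must be converted into the 2-D row-vector matrix a, one pooling window per row:

  pool2row_indices(x, field_height, field_width, stride=1)

- The 1-D vector z must be converted into the 4-D volume output:

  pool_fc2output(inputs, batch_size, out_height, out_width)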
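A minimal sketch of the pooling forward pass, assuming max pooling (the text above does not say which pooling type is used) and a row ordering of sample, channel, output row, output column. `pool2row_sketch` and `max_pool_forward_sketch` are hypothetical names, not the PyNet functions.

```python
import numpy as np


def pool2row_sketch(x, field_height, field_width, stride):
    """Unfold x [N, C, H, W] into a [N*C*out_h*out_w, field_height*field_width]."""
    N, C, H, W = x.shape
    out_h = (H - field_height) // stride + 1
    out_w = (W - field_width) // stride + 1
    a = np.empty((N * C * out_h * out_w, field_height * field_width), dtype=x.dtype)
    row = 0
    for n in range(N):
        for c in range(C):  # each channel is pooled on its own
            for i in range(out_h):
                for j in range(out_w):
                    top, left = i * stride, j * stride
                    window = x[n, c, top:top + field_height, left:left + field_width]
                    a[row] = window.reshape(-1)
                    row += 1
    return a, out_h, out_w


def max_pool_forward_sketch(x, field_height=2, field_width=2, stride=2):
    N, C = x.shape[:2]
    a, out_h, out_w = pool2row_sketch(x, field_height, field_width, stride)
    z = a.max(axis=1)                       # [N*C*out_h*out_w]
    output = z.reshape(N, C, out_h, out_w)  # [N, C, out_h, out_w]
    return a, z, output


x = np.arange(16.0).reshape(1, 1, 4, 4)
a, z, output = max_pool_forward_sketch(x)
print(a.shape, z.shape, output.shape)  # (4, 4) (4,) (1, 1, 2, 2)
print(output[0, 0])                    # [[ 5.  7.] [13. 15.]]
```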

During backpropagation, the gradient shapes change as follows:

| Variable | Shape |
|---|---|
| doutput | [N, C, out_h, out_w] |
| dz | [N*C*out_h*out_w] |
| da | [N*C*out_h*out_w, filter_h*filter_w] |
| dinput | [N, C, H, W] |

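Note the following:

- The 4-D input doutput must be converted into the 1-D vector dz:

  pool_output2fc(inputs)

- The 2-D gradient matrix da must be converted into the 4-D gradient volume dinput:

  row2pool_indices(rows, x_shape, field_height=2, field_width=2, stride=2, isstinct=False)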
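For max pooling (an assumption, as the pooling type is not stated), the backward pass routes each entry of dz to the position that held the maximum in its window and leaves the rest at zero. The sketch below uses the hypothetical name `max_pool_backward_sketch` and assumes the rows of a are ordered by sample, channel, output row, output column.

```python
import numpy as np


def max_pool_backward_sketch(doutput, a, x_shape, field_height=2, field_width=2, stride=2):
    N, C, H, W = x_shape
    out_h, out_w = doutput.shape[2:]
    dz = doutput.reshape(-1)                     # [N*C*out_h*out_w]
    da = np.zeros(a.shape, dtype=doutput.dtype)  # [N*C*out_h*out_w, fh*fw]
    # Only the arg-max entry of each window receives gradient
    da[np.arange(a.shape[0]), a.argmax(axis=1)] = dz
    dinput = np.zeros(x_shape, dtype=doutput.dtype)
    row = 0
    for n in range(N):
        for c in range(C):
            for i in range(out_h):
                for j in range(out_w):
                    top, left = i * stride, j * stride
                    # Add each row back to the window it was read from
                    dinput[n, c, top:top + field_height, left:left + field_width] += \
                        da[row].reshape(field_height, field_width)
                    row += 1
    return dz, da, dinput


x = np.arange(16.0).reshape(1, 1, 4, 4)
# Rows of a: the four 2x2 windows of x, in (output row, output column) order
a = np.array([x[0, 0, i:i + 2, j:j + 2].reshape(-1) for i in (0, 2) for j in (0, 2)])
doutput = np.ones((1, 1, 2, 2))
dz, da, dinput = max_pool_backward_sketch(doutput, a, x.shape)
print(dinput[0, 0])  # ones at (1,1), (1,3), (3,1), (3,3); zeros elsewhere
```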

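Layer Definition

A basic layer provides three functions:

- Initialization
- Forward propagation
- Backpropagation

class Layer(metaclass=ABCMeta):

Layers that have parameters additionally implement functions to update, get, and set those parameters:

def update(self, lr=1e-3, reg=1e-3):

Layer implementation: PyNet/nn/layers.py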
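One way such a layer interface might look is sketched below. Only the `Layer(metaclass=ABCMeta)` declaration and the `update(self, lr=1e-3, reg=1e-3)` signature come from the text above; the `FCSketch` class, the method names `get_params` and `set_params`, and the L2-style use of `reg` are assumptions made for illustration and are not taken from PyNet/nn/layers.py.

```python
from abc import ABCMeta, abstractmethod

import numpy as np


class Layer(metaclass=ABCMeta):
    """Base interface: every layer supports forward and backward."""

    @abstractmethod
    def forward(self, inputs):
        ...

    @abstractmethod
    def backward(self, grad_out):
        ...


class FCSketch(Layer):
    """Hypothetical fully connected layer with parameters."""

    def __init__(self, in_dim, out_dim):
        rng = np.random.default_rng(0)
        self.W = 0.01 * rng.standard_normal((in_dim, out_dim))
        self.b = np.zeros((1, out_dim))
        self.inputs = self.dW = self.db = None

    def forward(self, inputs):
        self.inputs = inputs  # cached for the backward pass
        return inputs @ self.W + self.b

    def backward(self, grad_out):
        self.dW = self.inputs.T @ grad_out
        self.db = grad_out.sum(axis=0, keepdims=True)
        return grad_out @ self.W.T

    def update(self, lr=1e-3, reg=1e-3):
        # Assumed: plain gradient descent with an L2 penalty on W only
        self.W -= lr * (self.dW + reg * self.W)
        self.b -= lr * self.db

    def get_params(self):
        return {'W': self.W.copy(), 'b': self.b.copy()}

    def set_params(self, params):
        self.W = params['W'].copy()
        self.b = params['b'].copy()


fc = FCSketch(4, 3)
x = np.ones((2, 4))
out = fc.forward(x)
dinput = fc.backward(np.ones_like(out))
fc.update(lr=1e-3, reg=1e-3)
print(out.shape, dinput.shape)  # (2, 3) (2, 4)
```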

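Network Definition

A network implements the following functions:

- Initialization
- Forward propagation
- Backpropagation
- Parameter update
- Getting parameters
- Setting parameters

class Net(metaclass=ABCMeta):

Network implementation: PyNet/nn/nets.py

LeNet-5 is defined as follows:

class LeNet5(Net):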

Saving and Loading Parameters

Parameters can be saved to a file and loaded back from it using Python's pickle module; they are stored as a dictionary.

def save_params(params, path='params.pkl'):

Full code: PyNet/nn/net_utils.py
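A minimal sketch of the save and load round trip with pickle. The `save_params` signature is the one shown above; the body, the `load_params` counterpart, and the example parameter names are assumptions for illustration.

```python
import os
import pickle
import tempfile

import numpy as np


def save_params(params, path='params.pkl'):
    """Write the parameter dictionary to path."""
    with open(path, 'wb') as f:
        pickle.dump(params, f)


def load_params(path='params.pkl'):
    """Read a parameter dictionary back from path (hypothetical counterpart)."""
    with open(path, 'rb') as f:
        return pickle.load(f)


# Hypothetical parameter names, used only for this example
params = {'W1': np.arange(6.0).reshape(2, 3), 'b1': np.zeros((1, 3))}
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'params.pkl')
    save_params(params, path)
    loaded = load_params(path)
print(sorted(loaded))  # ['W1', 'b1']
```

Pickle files should only be loaded from trusted sources, since unpickling can execute arbitrary code.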

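MNIST Data

After the MNIST dataset has been downloaded and extracted, loading it involves the following steps:

- Resize each image to (32,32)
- Reorder the dimensions: [H, W, C] -> [C, H, W]

Full code: PyNet/src/load_mnist.py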
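The two loading steps can be sketched as below. How the resize to (32,32) is actually done is not stated above; this example assumes symmetric zero-padding of a 28x28 image, which is one common choice (an interpolating resize would be another). `preprocess_sketch` is a hypothetical name.

```python
import numpy as np


def preprocess_sketch(img_hwc, size=32):
    """Zero-pad an [H, W, C] image to size x size, then reorder to [C, H, W]."""
    H, W, _ = img_hwc.shape
    pad_h, pad_w = size - H, size - W
    top, left = pad_h // 2, pad_w // 2
    # Assumed resize strategy: pad the two spatial axes, leave channels alone
    padded = np.pad(img_hwc, ((top, pad_h - top), (left, pad_w - left), (0, 0)))
    return padded.transpose(2, 0, 1)  # [H, W, C] -> [C, H, W]


img = np.full((28, 28, 1), 7, dtype=np.uint8)  # dummy grayscale image
out = preprocess_sketch(img)
print(out.shape)  # (1, 32, 32)
```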

After loading, the data still needs to be standardized, because pixel values lie in [0,255]. Following PyTorch's usage, a simple version is:

# Standardization

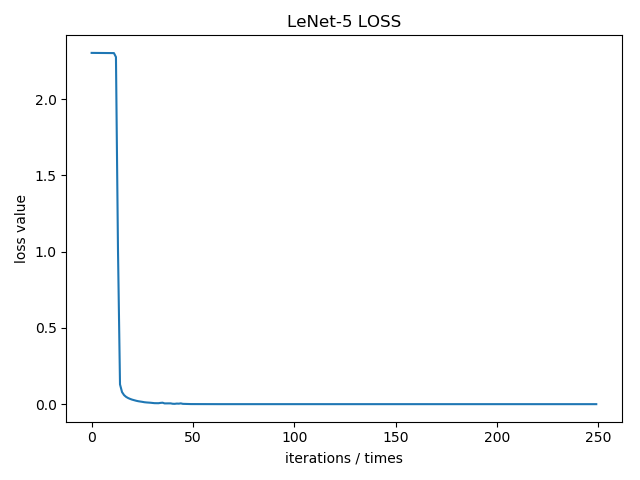
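A minimal sketch of such a standardization, assuming pixels are first scaled to [0, 1] and then shifted and scaled with a mean and standard deviation of 0.5, as is often done with PyTorch transforms. The constants are an assumption, not the values used in the post, and `standardize_sketch` is a hypothetical name.

```python
import numpy as np


def standardize_sketch(x, mean=0.5, std=0.5):
    """Map uint8 pixels in [0, 255] to floats in [-1, 1] (with the default constants)."""
    x = x.astype(np.float64) / 255.0  # scale to [0, 1]
    return (x - mean) / std           # center and rescale


pixels = np.array([0, 255], dtype=np.uint8)
print(standardize_sketch(pixels))  # [-1.  1.]
```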

LeNet-5 Training

The training parameters are as follows:

- Learning rate: lr = 1e-3
- Regularization strength: reg = 1e-3
- Batch size: batch_size = 128
- Number of iterations: epochs = 1000

net = LeNet5()

Full code: PyNet/src/lenet-5_test.py
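With batch_size = 128, each pass over the data is split into mini-batches. The sketch below shows only that batching step, with a hypothetical helper `iterate_batches` and dummy arrays in place of MNIST; inside the loop, the network's forward pass, backward pass, and parameter update with lr and reg would run once per batch.

```python
import numpy as np


def iterate_batches(x, y, batch_size=128, rng=None):
    """Yield shuffled mini-batches; the last batch may be smaller."""
    if rng is None:
        rng = np.random.default_rng()
    order = rng.permutation(len(x))
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]
        yield x[idx], y[idx]


# Dummy data standing in for preprocessed MNIST images and labels
x_train = np.zeros((1000, 1, 32, 32), dtype=np.float32)
y_train = np.zeros(1000, dtype=np.int64)
batches = list(iterate_batches(x_train, y_train, batch_size=128,
                               rng=np.random.default_rng(0)))
print(len(batches), batches[-1][0].shape)  # 8 (104, 1, 32, 32)
```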

Training Results

Training time

| Hardware | Time per iteration |
|:---:|:---:|
| Intel(R) Core(TM) i7-8700 CPU @ 3.20GHz (12 logical cores) | about 166 s |

Training results

| Training accuracy | Test accuracy |
|:---:|:---:|
| 99.99% | 99.04% |