Tool & Function Calling

Overview

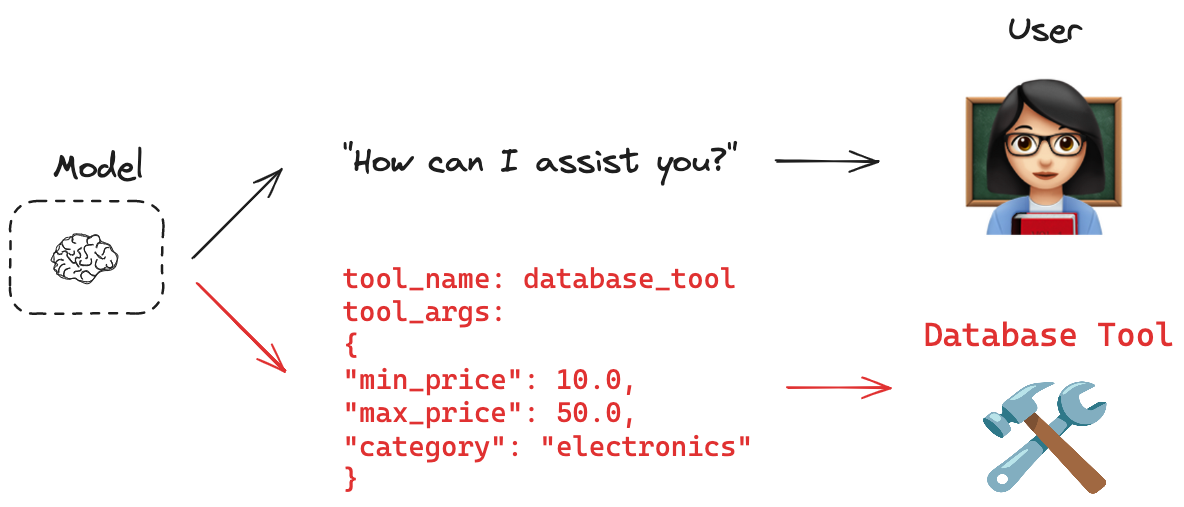

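Function Calling is a feature first introduced by OpenAI that lets a large language model (LLM) call external tools or services; other LLM vendors have since added support for it. It works by defining a set of standardized JSON-format interfaces so that the model can understand and generate call requests for specific functions. These external tools can be general-purpose services such as web search, database queries, and file parsing, or user-defined business-logic functions.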

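With Function Calling, an LLM can "delegate" tasks it cannot handle on its own to the appropriate tool, which significantly improves its practical capability and depth of reasoning. The mechanism makes the model more useful and also gives complex LLM-based systems a solid foundation for extension.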

Over time, this feature has come to be more widely known as Tool Calling; the two terms are semantically equivalent.

Function calling

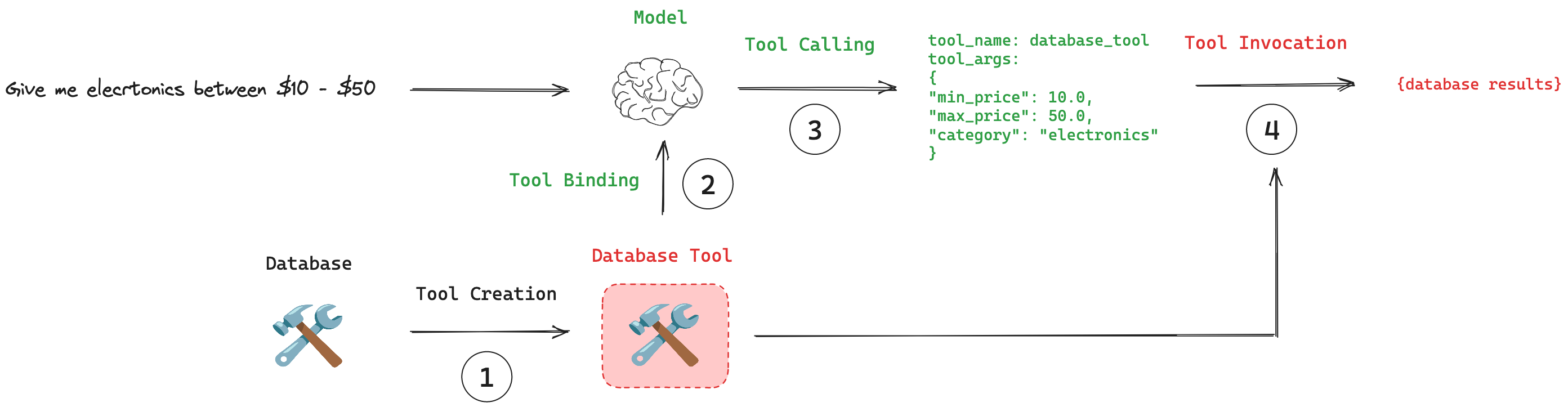

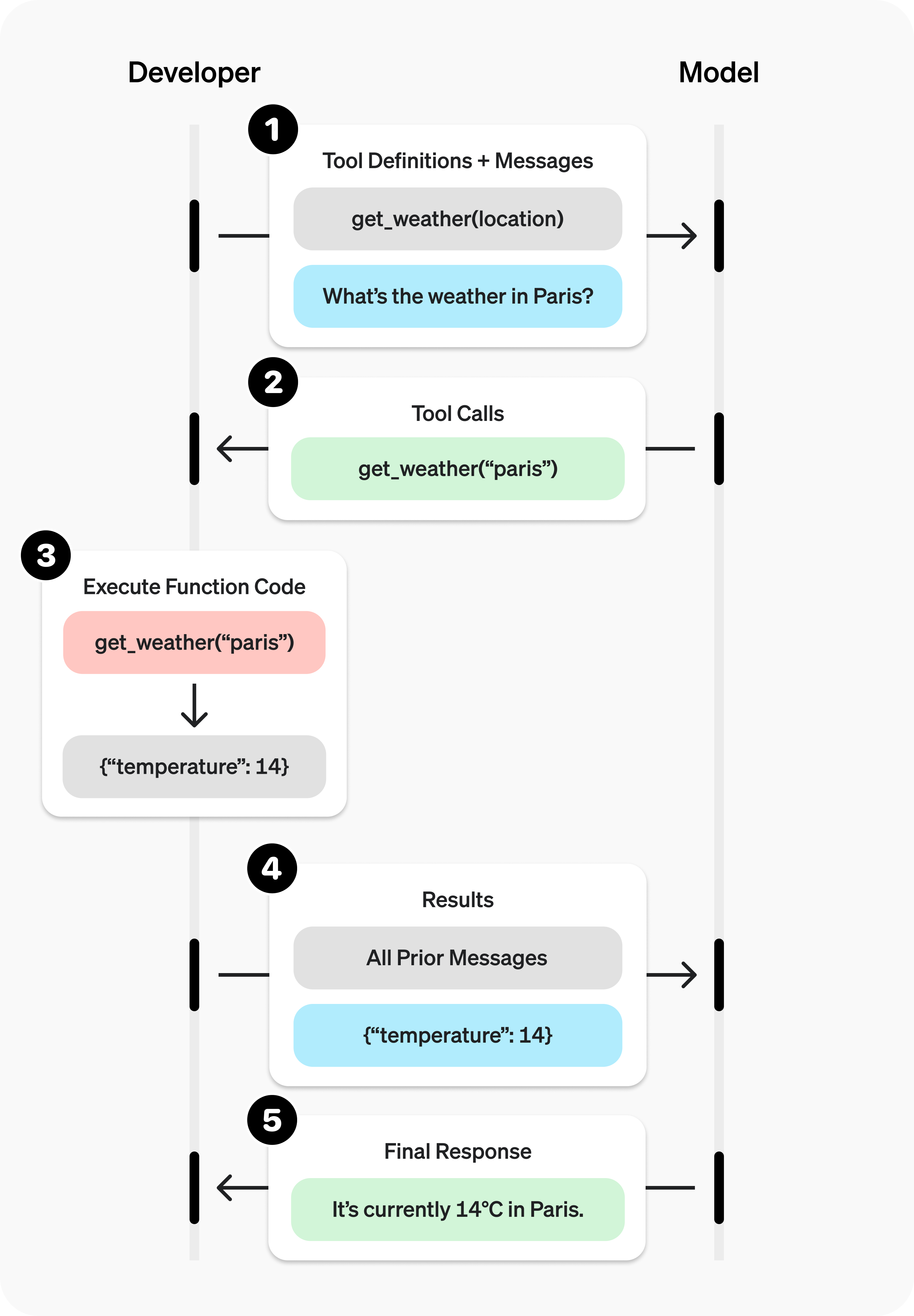

The Function calling workflow can usually be divided into the following five steps:

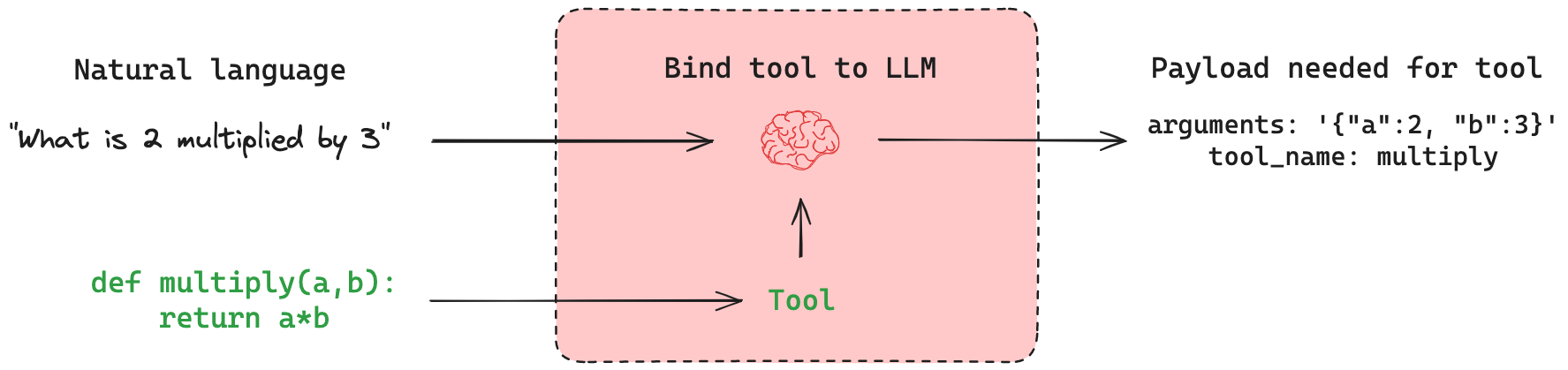

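- Define the available tools: before sending a request to the LLM, declare the callable tools and their descriptions in the request parameters, so the model knows which external capabilities it can use to complete the task;

- The LLM generates tool-call instructions: after understanding the user's question, the model considers the available tools, decides whether it needs to call one or more of them, and outputs the corresponding tool names and arguments;

- Execute the tool calls: based on the call information returned by the model, the system actually invokes the corresponding external tools (an API, a database query, and so on) with the specified arguments to obtain real data;

- Inject the tool results into the context: the results returned by the tools are merged into the original conversation context and passed back to the LLM as new input for further understanding and reasoning;

- The LLM generates the final natural-language response: based on the user's original question and the data returned by the tools, the model produces the final natural-language reply, completing the interaction.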

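Note: a complete Function calling flow involves two LLM inference calls:

- First call: the input is the user's question plus the descriptions of the available tools; the output is the tool the model wants to call and its arguments.

- Second call: the input is the user's question plus the actual results returned by the tools; the output is the final natural-language answer for the user.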

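This flow forms the complete Function calling interaction loop. It lets a language model reach beyond its own capabilities and use external tools to access real-time external data and services, which makes it more powerful and more practical.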

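Step 1: define the function get_weather, which takes a latitude and longitude and returns the temperature at that location, and define the call format for the function.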

    import requests
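As a minimal sketch of this step, assuming the public Open-Meteo forecast endpoint as the weather backend and the OpenAI-style `tools` schema, the function and its call format might look like the following. The endpoint, the parameter names `latitude` and `longitude`, and the description strings are illustrative assumptions rather than the original listing.

```python
# Assumption: Open-Meteo's public forecast endpoint is the weather backend.
OPEN_METEO_URL = "https://api.open-meteo.com/v1/forecast"


def get_weather(latitude: float, longitude: float) -> float:
    """Return the current temperature (Celsius) at the given coordinates."""
    import requests  # imported here so the schema below is usable without it

    resp = requests.get(
        OPEN_METEO_URL,
        params={
            "latitude": latitude,
            "longitude": longitude,
            "current": "temperature_2m",
        },
        timeout=10,
    )
    resp.raise_for_status()
    return resp.json()["current"]["temperature_2m"]


# Call format advertised to the model (OpenAI-style tool definition).
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current temperature in Celsius for a pair of coordinates.",
            "parameters": {
                "type": "object",
                "properties": {
                    "latitude": {"type": "number", "description": "Latitude in decimal degrees"},
                    "longitude": {"type": "number", "description": "Longitude in decimal degrees"},
                },
                "required": ["latitude", "longitude"],
            },
        },
    }
]
```

The `description` fields matter in practice: they are the only information the model has when deciding whether and how to call the function.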

Step 2: call the LLM, passing in the query and the tools available for calling.

    import litellm
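A hedged sketch of the first call, assuming LiteLLM's `completion` function with OpenAI-style `tools` and `tool_choice` arguments. The model name `gpt-4o-mini`, the sample question, and the helper names `build_first_request` and `call_model` are assumptions chosen for illustration.

```python
# Tool definition for get_weather (see step 1), repeated so this sketch stands alone.
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current temperature in Celsius for a pair of coordinates.",
            "parameters": {
                "type": "object",
                "properties": {
                    "latitude": {"type": "number"},
                    "longitude": {"type": "number"},
                },
                "required": ["latitude", "longitude"],
            },
        },
    }
]


def build_first_request(question: str, model: str = "gpt-4o-mini") -> dict:
    """Assemble the arguments for the first LLM call: question plus tool list."""
    return {
        "model": model,  # assumption: substitute any tool-capable model
        "messages": [{"role": "user", "content": question}],
        "tools": tools,
        "tool_choice": "auto",  # let the model decide whether to call a tool
    }


def call_model(request: dict):
    """Send the request; needs the litellm package and a provider API key."""
    import litellm

    return litellm.completion(**request)


request = build_first_request("What is the temperature in Paris right now?")
# response = call_model(request)  # uncomment once credentials are configured
```

Keeping request construction separate from the network call makes it easy to inspect exactly what the model is shown before any tokens are spent.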

Step 3: parse the result returned by the LLM.

    response_message = response.choices[0].message
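To illustrate what is being parsed, here is a small sketch that uses a hand-built stand-in for the response object instead of a live API call. The stand-in mimics the OpenAI-style shape (`choices[0].message.tool_calls`); the call id and the arguments are made-up sample values.

```python
from types import SimpleNamespace

# Stand-in for the object returned by the first LLM call (sample values only).
response = SimpleNamespace(
    choices=[
        SimpleNamespace(
            message=SimpleNamespace(
                role="assistant",
                content=None,  # typically empty when the model asks for a tool
                tool_calls=[
                    SimpleNamespace(
                        id="call_1",
                        type="function",
                        function=SimpleNamespace(
                            name="get_weather",
                            arguments='{"latitude": 48.8566, "longitude": 2.3522}',
                        ),
                    )
                ],
            )
        )
    ]
)

response_message = response.choices[0].message
# tool_calls is None or empty when the model chose to answer directly.
tool_calls = response_message.tool_calls or []

for call in tool_calls:
    # arguments arrives as a JSON string, not a dict
    print(call.id, call.function.name, call.function.arguments)
```

If `tool_calls` is empty, the application can return `response_message.content` to the user and skip the remaining steps.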

Based on the parsed result, call the corresponding external tool.

    import json
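One possible way to dispatch the call is a name-to-function registry, sketched below. The `available_functions` mapping and the `execute_tool_call` helper are hypothetical names, and `get_weather` is stubbed with a fixed made-up value so the example runs without network access.

```python
import json


def get_weather(latitude: float, longitude: float) -> float:
    # Stub standing in for the real get_weather; returns a fixed sample value.
    return 18.5


# Registry mapping the tool names exposed to the model to Python callables.
available_functions = {"get_weather": get_weather}


def execute_tool_call(name: str, arguments_json: str) -> str:
    """Run one tool call and return its result as a JSON string."""
    func = available_functions.get(name)
    if func is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(arguments_json)  # the model sends arguments as JSON text
        result = func(**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong argument names: report it instead of crashing.
        return json.dumps({"error": str(exc)})
    return json.dumps({"result": result})


print(execute_tool_call("get_weather", '{"latitude": 48.8566, "longitude": 2.3522}'))
```

Returning errors as data rather than raising lets the model see what went wrong and, in many cases, retry with corrected arguments.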

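Step 4 / Step 5: combine the user's question with the result obtained from the external tool, run a second LLM call, and generate the final result.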

    second_response = litellm.completion(
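A hedged sketch of how the second request might be assembled, assuming the OpenAI-style message format in which each tool result is a `role: "tool"` message linked to its call through `tool_call_id`. The helper names, the model name `gpt-4o-mini`, and all sample values are assumptions.

```python
def build_second_request(question, assistant_message, tool_results, model="gpt-4o-mini"):
    """Combine the question, the model's tool calls, and the tool outputs."""
    messages = [{"role": "user", "content": question}, assistant_message]
    for call in assistant_message["tool_calls"]:
        messages.append(
            {
                "role": "tool",
                "tool_call_id": call["id"],  # ties the result to the call it answers
                "content": tool_results[call["id"]],
            }
        )
    return {"model": model, "messages": messages}


def call_model(request: dict):
    """Send the request; needs the litellm package and a provider API key."""
    import litellm

    return litellm.completion(**request)


# Made-up sample values standing in for the outputs of the earlier steps.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_1",
            "type": "function",
            "function": {
                "name": "get_weather",
                "arguments": '{"latitude": 48.8566, "longitude": 2.3522}',
            },
        }
    ],
}
tool_results = {"call_1": "18.5"}

request = build_second_request(
    "What is the temperature in Paris right now?", assistant_message, tool_results
)
# second_response = call_model(request)
# print(second_response.choices[0].message.content)
```

The assistant message that contains the tool calls has to be included before the tool results; otherwise the API has no call to match each `tool_call_id` against.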

Parallel function calling

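In some advanced scenarios, a large language model (LLM) can request several tool calls in a single inference pass. Traditionally, a model handling a task that needs external data calls functions one after another, which can lead to high latency. LLM APIs (such as OpenAI's tool_calls mechanism) can instead return multiple function-call instructions in a single response, so the application can execute them in parallel and significantly shorten the overall response time.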

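Consider the following user input:

"What's the weather like in San Francisco, Tokyo, and Paris today?"

This is a typical multi-target query that requires the model to fetch real-time weather information for three different cities. To handle the request efficiently, the LLM can issue three get_weather calls in a single reply, each with the location argument for one city.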

    # INPUT MESSAGES
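As an illustration of the application side, the sketch below takes three tool calls of the kind a model might return for this question and runs them concurrently with a thread pool. The `tool_calls` payload, the call ids, and the temperatures are made-up sample data, and `get_weather` is a stub that takes a `location` string.

```python
import json
from concurrent.futures import ThreadPoolExecutor

# Made-up sample data; a real get_weather would query a weather API.
FAKE_TEMPERATURES_C = {"San Francisco": 16, "Tokyo": 22, "Paris": 18}


def get_weather(location: str) -> str:
    """Stub tool: return a JSON string with the sample temperature."""
    return json.dumps(
        {"location": location, "temperature_c": FAKE_TEMPERATURES_C.get(location)}
    )


# Shape of the tool calls an OpenAI-style API may return in ONE assistant message.
tool_calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"location": "San Francisco"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"location": "Tokyo"}'}},
    {"id": "call_3", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"location": "Paris"}'}},
]


def run_call(call: dict) -> dict:
    """Execute one tool call and wrap the result as a tool message."""
    args = json.loads(call["function"]["arguments"])
    return {
        "role": "tool",
        "tool_call_id": call["id"],
        "content": get_weather(**args),
    }


# The calls are independent, so they can run concurrently; map keeps input order.
with ThreadPoolExecutor(max_workers=len(tool_calls)) as pool:
    tool_messages = list(pool.map(run_call, tool_calls))

for message in tool_messages:
    print(message["tool_call_id"], message["content"])
```

All three tool messages are then appended to the conversation after the assistant message, and a single follow-up LLM call produces the combined answer.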