动手实现YOLO5Face

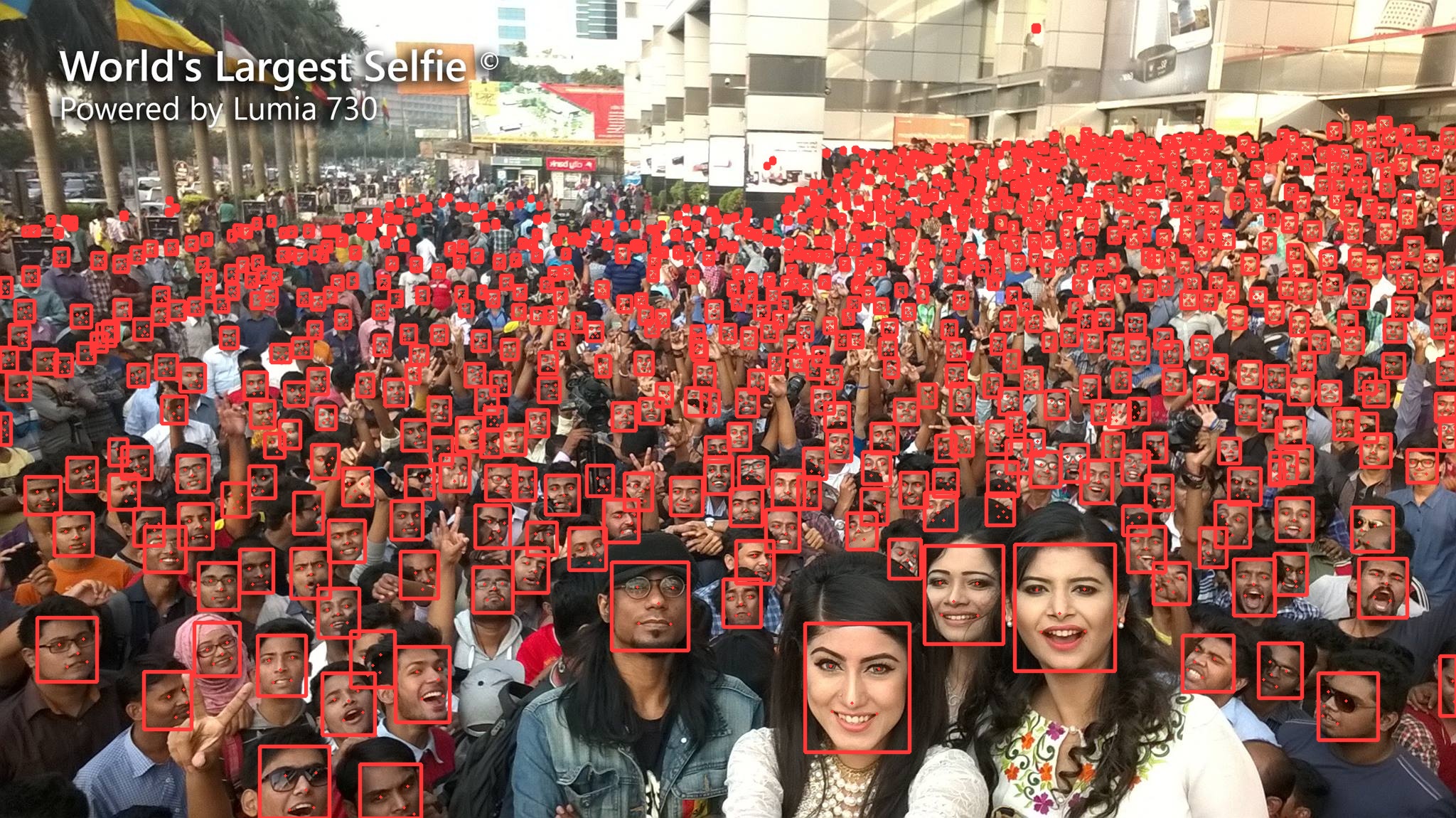

YOLO5Face可以理解为YOLOv5的扩展版本,它在原有的基础上添加了一个专门用于检测面部特征点的HEAD。这使得 YOLO5Face 在实时推理过程中,不仅能高效地检测出人脸的边界框,还能同时精确定位出人脸上的五个关键特征点坐标。这种一体化的设计大大简化了人脸识别与分析的工作流程,为后续的应用(如面部表情识别、姿态估计等)提供更丰富的信息支持。

概述

官方代码deepcam-cn/yolov5-face参考的是ultralytics/yolov5之前版本的实现,为了更好的利用yolov5最新的算法和训练实现,我尝试着在ultralytics/yolov5 v7.0上集成YOLO5Face,主要有以下几个模块的修改,分别是模型实现、图像预处理、损失函数以及数据后处理。

- 论文解读:YOLO5Face: Why Reinventing a Face Detector

- 自定义实现:zjykzj/YOLO5Face

- 数据集:WIDER FACE: A Face Detection Benchmark

模型实现

在论文描述中,YOLO5Face在模型方面主要有5点优化:

- 新建

StemBlock替换原先的输入层Focus; - 新建

CBS block替换原先的CSP block(C3); - 使用更小的

kernel size (3/5/7)优化SPP模块(原先是5/9/11); - 使用SPP模块处理过的卷积特征作为P6输出层(可选);

- 在最后输出层增加Landmark HEAD,用于检测人脸关键点坐标。

从YOLO5Face官网最新实现来看,第2点已经取消,仍旧使用C3模块进行特征提取;另外,参考yolov5-v7.0的实现,已经使用单纯的Conv层替换了Focus模块,以及使用SPPF模块替换了SPP模块。所以综合上述情况,我在yolov5-v7.0版本的基础上进行了两点修改:

- 在SPPF模块中使用更小的kernel size进行池化操作(

SPPF(k=3) == SPP(k=(3, 5, 7)); - 在最后的输出层增加Landmark HEAD实现。

具体配置文件如下,以yolov5s_v7_0.yaml为例:

1 | # YOLOv5 🚀 by Ultralytics, GPL-3.0 license |

对比了YOLOv5n算法在SPPF(k=5)与SPPF(k=3)两种配置下于WIDER FACE数据集上的表现,发现两者精度相近。因此,最终选择了YOLOv5模型的默认配置(SPPF(k=5))。

| 模型 | Easy Val AP | Medium Val AP | Hard Val AP |

|---|---|---|---|

| YOLOv5n with SPPF(k=5) | 0.92797 | 0.90772 | 0.80272 |

| YOLOv5n with SPPF(k=3) | 0.92628 | 0.90603 | 0.80403 |

SPP vs. SPPF

SPP模块最初源自SPPNet分类模型,该模型采用多级池化结构以确保无论输入图像的尺寸如何,都能获得相同大小的输出特征向量。在YOLOv3中,SPP模块首次被引入至目标检测网络,作为Backbone层和Neck层之间的组件,旨在利用不同内核大小的池化层捕获多尺度特征(注:输出特征向量的空间大小仍旧和输入特征保持一致)。随后,在YOLOv5中,SPP模块被作者进一步优化为SPPF模块,和SPP模块具有相同的计算结果,同时加快了推理速度。

1 | # Profile |

对于SPPF模块,SPPF(k=5) == SPP(k=(5, 9, 13)以及SPPF(k=3) == SPP(k=(3, 5, 7)

1 | class SPP(nn.Module): |

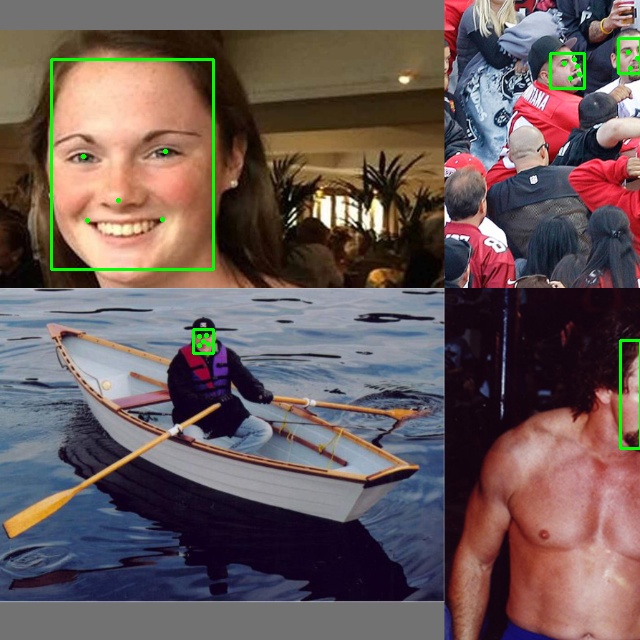

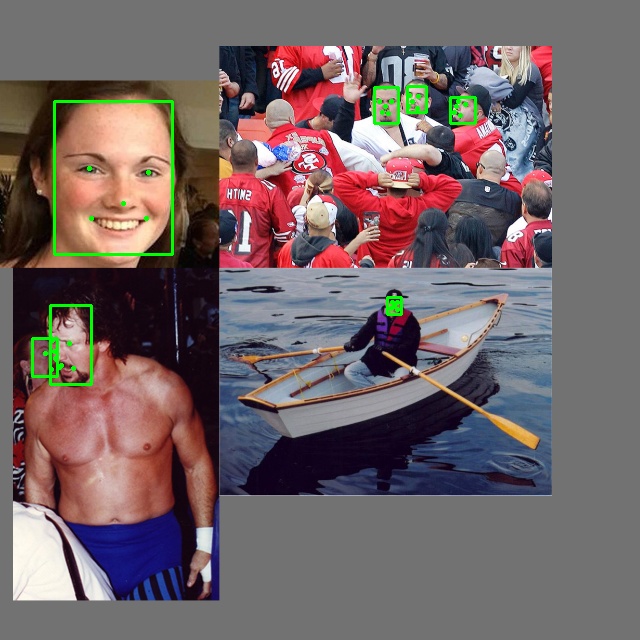

FaceDetect

对于YOLOv5的输出层(Detect模块),最终输出的卷积特征大小是(bs, na, fw, fh, o_dim),

bs表示批量大小na表示该层锚点个数,默认是3;fw/fh表示卷积特征空间大小;o_dim表示xy/wh/conf/probs输出,默认是xy=2, wh=2, conf=1, n_classes=80(以COCO数据集为例),所以o_dim长度是2+2+1+80=85。

对于YOLO5Face而言,人脸目标即通用目标,所以仅需额外增加10个关键点坐标的预测即可,最终o_dim由xy/wh/conf/probs/landmarks构成,o_dim长度是2+2+1+80+10=95。人脸目标和关键点的计算公式如下:

$$

xy=(sigmoid(xy)*2+grid_i-0.5)*stride_i\ \ wh=(sigmoid(wh)2)^2anchor_i

$$

$$

probs=sigmoid(probs)\ \ landmarks=landmarksanchor_i+grid_istride_i

$$

xy表示预测框中心点坐标,使用sigmoid函数进行归一化后结合网格坐标和步长放大到输入图像大小;wh表示预测框宽高,使用sigmoid函数进行归一化后集合步长和锚点框大小放大到输入图像大小;probs使用BCSLoss进行训练,所以仅需对每个分类item进行归一化操作即可得到分类概率;landmarks坐标的计算参考了预测框的实现,它结合了网格坐标、步长和锚点框大小进行计算。

注意:上面公式中anchor_i已经经过了步长放大,否则计算公式中wh和landmarks坐标的计算可以修改为:

$$

wh=(sigmoid(wh)2)^2anchor_i*stride_i

$$

1 | class FaceDetect(nn.Module): |

图像预处理

YOLO5Face把人脸抽象成对象,所以yolov5工程中的图像预处理算法都可以作用于人脸数据。另外,YOLO5Face增加了对人脸关键点的检测,所以人脸数据不仅仅包含人脸边界框,还拥有人脸关键点坐标,需要对原先的图像预处理算法进行改造,同步处理人脸边界框和人脸关键点坐标。虽然在yolov5-v7.0工程中,对于Nano和Small模型使用配置文件hyp.scratch-low.yaml,对于其他更大的模型使用hyp.scratch-high.yaml进行训练;对于自定义的YOLO5Face工程,统一使用hyp.scratch-low.yaml,所以在图像预处理方面,涉及到3方面的改造:

- 标签文件的加载和保存

- 人脸边界框和人脸关键点的坐标变换

- 图像预处理算法

标签文件

原先的yolov5工程的标签文件格式如下:

1 | # cls_id x_center y_center box_w box_h |

YOLO5Face在每个边界框增加了5个人脸关键点坐标,格式如下:

1 | # cls_id x_center y_center box_w box_h kp1_x kp1_y kp2_x kp2_y kp3_x kp3_y kp4_x kp4_y kp5_x kp5_y |

注1:这5个人脸关键点分别是左眼、右眼、鼻头、左嘴角、右嘴角。

注2:如果因为遮挡、过小等因素没有标注出人脸关键点,使用-1.0进行标识。

在yolov5工程中涉及到标签文件的读取和解析实现是verify_image_label函数,

1 | def verify_image_label(args): |

坐标变换

在图像预处理过程中,坐标格式会在xywhn和xyxy之间进行转换,这里面涉及到两个坐标变换函数:

xywhn2xyxyxyxy2xywhn

在处理边界框坐标的过程中,还需要考虑到关键点坐标的变换:

1 | labels = self.labels[index].copy() |

图像预处理

涉及到以下几个图像预处理函数(注意:仅考虑配置文件hyp.scratch-low.yaml):

- mosaic

- random_perspective

- letterbox

- fliplr

马赛克

简单描述下yolov5工程中马赛克预处理函数的操作流程:

- 初始化设置

- 创建结果图像

img4,大小为模型输入大小的2倍(img_size*2, img_size*2),默认填充值是114; - 随机创建马赛克中心点

(xc, yc),取值范围是[img_size // 2, img_size + img_size //2];

- 创建结果图像

- 随机遍历4张图像

- 第一张图像赋值到

img4的左上角,截取的是原图的右下角内容; - 第二张图像赋值到

img4的右上角,截取的是原图的左下角内容; - 第三张图像赋值到

img4的左下角,截取的是原图的右上角内容; - 第四张图像赋值到

img4的右下角,截取的是原图的左上角内容; - 注意:每张子图具体截取大小取决于马赛克中心点。最理想情况下中心点位于图像

img4的中心(此时(xc, yc) == (img_size, img_size));

- 第一张图像赋值到

- 随机透视

- 将结果图像

img4进行透视变换,最终得到模型指定的输入大小(img_size, img_size)。

- 将结果图像

mosaic函数中涉及到坐标变换的工作有两部分:

- 将原先经过归一化缩放的标注框和关键点坐标转换到裁剪后的坐标系大小;

- 在随机透视函数中进行标注框和关键点的坐标转换。

1 | def load_mosaic(self, index): |

随机透视

yolov5工程的随机透视函数random_perspective执行以下几何变换操作:

中心化 (Center):将图像中心移到坐标系原点;透视变换 (Perspective):在x和y轴方向上添加随机的透视效果;旋转和缩放 (Rotation and Scale):- 随机选择一个角度范围内的旋转值,并对图像进行旋转

- 随机选择一个缩放比例,并应用到图像上

剪切 (Shear):在x和y方向上分别添加一个随机的剪切角度;平移 (Translation):图像在x和y方向上进行随机平移。

如果不执行透视变换 (Perspective)操作,也就是只需要进行仿射变换时,即旋转、缩放、剪切和平移等线性变换时,使用函数cv2.warpAffine;否则,使用cv2.warpPerspective。

1 | def random_perspective(im, |

等比填充

在图像经过等比填充后,会对边界框坐标进行转换,这一过程也需要对关键点坐标进行转换。

1 | # Letterbox |

左右翻转

对人脸边界框进行左右翻转,需要考虑到人脸关键点中左右眼和左右嘴角的变换:

1 | # Flip left-right |

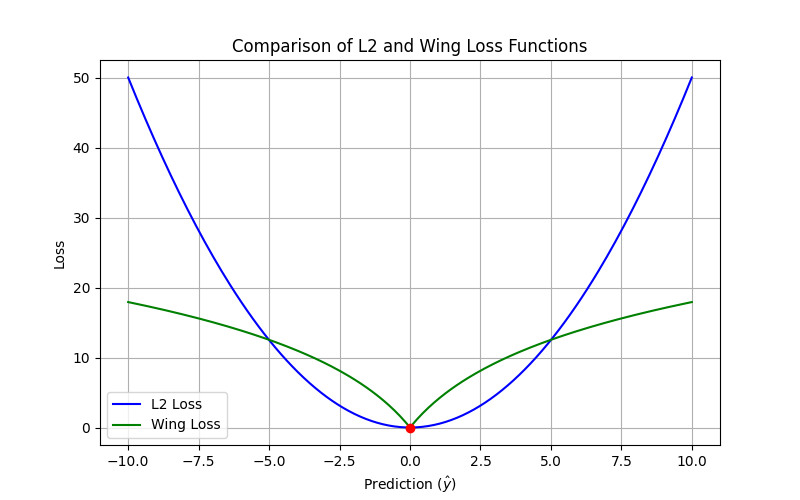

损失函数

对于损失函数,主要在yolov5 loss的基础上增加两部分内容:一是确定landmarks的坐标计算方式;一是确定landmarks的损失计算方式。论文参考了预测框的计算方式,结合锚点和网格点来计算人脸关键点坐标。

另外,论文最终选择了Wing-loss,在预测坐标和真值坐标之间的误差较小时使用对数函数,在误差较大时使用线性函数计算损失值,这样可以减少异常值的影响同时保持损失函数对小误差的敏感度。

1 | class WingLoss(nn.Module): |

数据后处理

对于数据后处理函数non_max_suppression,其主要功能是通过置信度阈值来过滤预测框,然后通过IOU阈值过滤相同类别中低置信度的预测框。这个过程不直接涉及人脸关键点的计算,但在过滤预测框的过程中需要特别注意保存和更新人脸关键点的坐标,确保在保留预测框的同时,对应的关键点坐标也被正确保存,而在过滤掉预测框时,相应的关键点坐标也应被丢弃。

小结

YOLO5Face是一个非常优秀的人脸检测框架,它不仅结合了YOLO系列算法的优势,还额外实现了人脸关键点(Landmarks)的计算。该框架基于YOLOv5这一成熟的目标检测模型,进一步提升了人脸检测的精度和效率。随着YOLO系列算法的不断发展,我们可以期待未来会有更多改进版本,如YOLO6Face、YOLO7Face、YOLO8Face等。